Last month, I wrote about some applications that online gaming companies can take from the world of operations analytics, an area primarily used by traditional and retail businesses. A course on People Analytics from Wharton showed some ways people analytics could likewise be used to improve our businesses. While people analytics is often the domain of HR professionals, there are valuable elements for managers across tech businesses (many of whom do not have robust HR teams). Below are some of the most important takeaways from the course.

Identifying the noise and improving performance evaluations

A critical role for any leader or manager is accurately evaluating performance of your employees. Accuracy is important to ensure you provide useful feedback that helps people improve, assists you in putting the right people in the correct roles and identifies the skills needed for success in specific functions.

The fundamental challenge in performance evaluation is that performance measures are very noisy. There is a range of outcomes possible outside of the employee’s control. The challenge is separating skill and effort from luck so that you understand true performance.

In the course, the instructors highlight how often people confuse skill with luck. They start with an example from sports, showing that professional American football teams' ability to draft players (select them out of university) is primarily luck. While some teams have had a string of successes, success in one year has no predictive ability on success in future years. If skill were a key factor, then you would expect a team to repeat its success.

The same holds true for investment analysts. An analyst who has a great year is no more likely to have above-market results the next year than one of the poorest-performing analysts.
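The repeat-success test described above can be sketched with a small simulation. This is a minimal illustration under stated assumptions, not the analysis from the course: the function names, the sample size and the skill and luck spreads are all hypothetical. Each person's yearly result is modeled as a fixed skill plus fresh luck, and the year-to-year correlation shows how much of the result persists.

```python
import random


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_two_years(n_people, skill_sd, luck_sd, seed=0):
    """Two years of results: the same skill each year plus independent luck."""
    rng = random.Random(seed)
    skills = [rng.gauss(0, skill_sd) for _ in range(n_people)]
    year1 = [s + rng.gauss(0, luck_sd) for s in skills]
    year2 = [s + rng.gauss(0, luck_sd) for s in skills]
    return year1, year2


# Hypothetical worlds: one where results are pure luck, one where skill dominates.
luck_world = simulate_two_years(5000, skill_sd=0.0, luck_sd=1.0)
skill_world = simulate_two_years(5000, skill_sd=1.0, luck_sd=0.5)

luck_corr = pearson(*luck_world)
skill_corr = pearson(*skill_world)
print(f"all luck:     year-to-year correlation = {luck_corr:.2f}")
print(f"mostly skill: year-to-year correlation = {skill_corr:.2f}")
```

When results are driven by luck, last year's ranking says almost nothing about this year's, which is the pattern the course reports for draft picks and analysts.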

There are many reasons we confuse this luck with skill:

- Interdependence. I have found that a humbling amount of work depends on other people: if they are great we look great, and if they are not, we look bad. You should not attribute individual performance to something that is at the group level. In these cases, performance should be evaluated as a group. Conversely, reliable individual evaluation requires seeing people on other teams (for example, Tom Brady’s play on the Buccaneers will help assess whether his performance was due to him or the environment).

- Outcome bias. We tend to believe good things happen to those who work hard and judge by outcome, not by process.

- Reverse causality. When we see two correlated factors, we tend to believe one caused the other. In reality, there may be no causality or it may run in the other direction. This leads us to see things that do not exist and can prompt us to give people undeserved credit or blame. One example cited in the course was research that showed charisma did not impact whether a CEO was successful, but successful leaders were considered more charismatic.

- Narrative seeking. We want to make sense of the world and tell a causal story.

- Hindsight bias. Once we have seen something occur, it is hard to accept that we did not see it coming. We rewrite the history of the process in our minds.

- Context. We tend to neglect context when evaluating performance. We overattribute performance to personal skills and underattribute it to environmental factors such as the difficulty of the problem the employee faced, the quality of their team, etc. In psychology, this issue is referred to as the Fundamental Attribution Error: crediting or blaming personality traits for results that are really driven by the situation.

- Self-fulfilling prophecies. People tend to perform consistently with expectations. High expectations increase performance and low expectations decrease it.

- Small samples. Small samples lead to greater variation, so what we see in a small sample may not be representative of a large population.

- Omitted variable bias. There could be an additional factor that is driving both the performance and what we think is causing the performance. For example, we may think higher compensation is leading to better performance. The truth might be that extra effort is causing both higher compensation and superior performance; thus the key variable (effort) has been omitted.
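To make the small-samples point in the list above concrete, here is a minimal sketch. The numbers are assumptions chosen for illustration: every simulated employee has exactly the same true ability, and each observed result is that ability plus random noise. The only thing that changes is how many results we average per person.

```python
import random
import statistics


def observed_averages(sample_size, n_employees=2000, seed=1):
    """Average observed result per employee when everyone has identical skill."""
    rng = random.Random(seed)
    return [
        statistics.mean([rng.gauss(0, 1) for _ in range(sample_size)])
        for _ in range(n_employees)
    ]


# Judge each employee on 3 projects versus 100 projects.
small = observed_averages(sample_size=3)
large = observed_averages(sample_size=100)

spread_small = statistics.pstdev(small)
spread_large = statistics.pstdev(large)
print(f"spread of averages over 3 projects:   {spread_small:.2f}")
print(f"spread of averages over 100 projects: {spread_large:.2f}")
```

With only a few observations per person, identical employees look very different from each other, so the apparent stars and laggards in a short window are largely noise.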

When you are evaluating performance, there are several tools to improve your accuracy. You need to focus on the process the employee (or potential employee) took rather than only the outcome; we normally omit almost 50 percent of the objectives that we later identify as relevant to success. Thus, you should look at a much broader set of objectives that impact the business. This process includes determining what increases the likelihood of superior performance: beyond the traditional outcomes, are there four or five things that may not be obvious but contribute to overall success? A few years ago, I wrote how one basketball player (Shane Battier) was much more valuable than many players who scored more points or otherwise had flashier statistics. The same holds true in traditional business.

You need to look carefully at the job and understand what drives success. Define success not only by outcomes but also by how well these factors predict other KPIs, attrition, rate of promotion, etc. In the course, they also point out that what works for one role or company does not necessarily work for others. Google found that GPA was an awful predictor of performance, but for Goldman Sachs it is the gold standard of who will be successful.

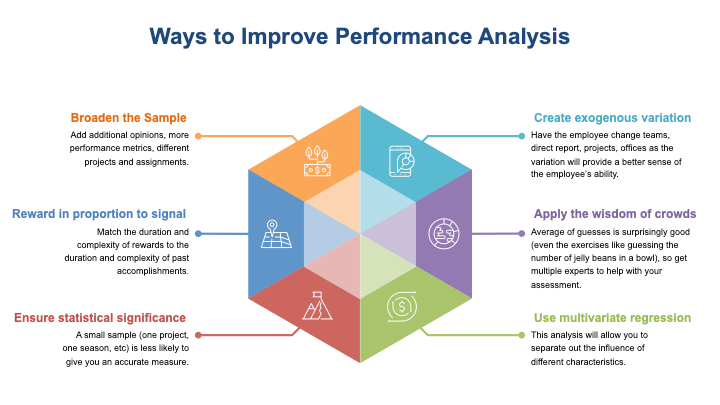

Additional ways to improve performance evaluation include:

- Broaden the sample. Add additional opinions, more performance metrics, different projects and assignments. The key is to use diverse, uncorrelated signals.

- Find and create exogenous variation. The only truly valid way to tease out causation is to control an employee’s environment. Have the employee change teams, direct report, projects or offices, as the variation will provide a better sense of the employee’s ability.

- Reward in proportion to the signal. Match the duration and complexity of rewards to the duration and complexity of past accomplishments. For short, noisy signals it is better to give bonuses and praise rather than raises and promotions.

- The wisdom of crowds. The average of many guesses is surprisingly good (even in exercises like guessing the number of jelly beans in a bowl), so get multiple experts to help with your assessment. Ensure, though, that their predictions are independent of each other (they are not talking to each other, they do not have the same background, etc.).

- Ensure statistical significance. A small sample (one project, one season, etc.) is less likely to give you an accurate measure.

- Use multivariate regression. This analysis will allow you to separate out the influence of different characteristics.
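As a sketch of how multivariate regression separates influences, the example below revisits the compensation and effort scenario from the omitted variable discussion. Everything here is an assumption for illustration: the data is synthetic, built so that effort drives both pay and performance while pay has no direct effect, and the helper functions `solve_linear_system` and `ols` are hypothetical names for a bare-bones least-squares fit. In practice you would more likely use a statistics package.

```python
import random


def solve_linear_system(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(columns, y):
    """Least-squares coefficients [intercept, b1, b2, ...] via normal equations."""
    rows = [[1.0, *vals] for vals in zip(*columns)]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Synthetic data: effort causes both pay and performance; pay itself does nothing.
rng = random.Random(42)
effort = [rng.gauss(0, 1) for _ in range(2000)]
pay = [e + rng.gauss(0, 0.5) for e in effort]
performance = [2.0 * e + rng.gauss(0, 0.5) for e in effort]

pay_only = ols([pay], performance)
pay_and_effort = ols([pay, effort], performance)
print(f"pay coefficient, effort omitted:  {pay_only[1]:.2f}")
print(f"pay coefficient, effort included: {pay_and_effort[1]:.2f}")
```

Regressing performance on pay alone makes pay look like a strong driver. Once effort is included, the pay coefficient falls to roughly zero, which is the separation of influences the regression is meant to provide.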

At the end of the day, you need to separate the signal from the noise to evaluate current performance and predict future success. Someone may have had a great performance or year but they may be a less valuable future employee than someone else because of luck or other environmental factors.

Recruiting the right people

Evaluating performance is important not only for your current team but also for recruiting the best new hires. Hiring the wrong person can have huge consequences, including missed growth opportunities, damage to your culture and decreased output. Yet most companies find consistently recruiting the right people difficult. This is often caused by the Illusion of Validity: we think we know more about people than we actually do. We interview somebody and believe we can judge his or her suitability for a job. This illusion is popped by research that shows the correlation of several hiring tools to subsequent performance, ranked here from most to least effective:

- Work samples.

- Cognitive ability tests (these are general intelligence tests).

- Structured interviews.

- Job knowledge tests.

- Integrity tests.

- Unstructured interviews.

- Personality tests.

- Reference checks.

Several of the low-scoring tools reinforce the Illusion of Validity. Unstructured interviews, where you meet someone and get a sense of their strengths and weaknesses, are often the paramount driver for whether we hire a candidate, but we are not good judges of character. I remember reading that when President Bush first met Russian President Putin in 2001, he said “I looked the man in the eye. I found him to be very straightforward and trustworthy.” We see how well that worked out. As the above research also shows, reference checks are even more ineffective in the hiring process for similar reasons.

What does work is seeing examples of their previous relevant work, intelligence tests and structured interviews. Structured interviews are ones designed to assess specific attributes of a candidate.

Use analysis for internal promotions

As well as improving the hiring process, People Analytics can help move the right people internally into the right roles. Often, people are promoted based on having done a great job in their current role. The course shows, though, that this approach often leads to negative outcomes (both for the employee and the company). The skills needed to succeed in the next job may not be the same skills that led to success in the current job. Performance in one job is not automatically a predictor of performance in a new role.

Just as it is important to understand the key predictors of success when recruiting, you need to do the same with internal promotion. Understand what leads to success in the new role and hire internally (or externally) those most likely to succeed. The good news is that research has shown that people promoted performed better overall than new hires into comparable roles.

Reducing employee churn

Attrition is one of the costliest problems companies face, and People Analytics can help combat it. The expense of losing an employee includes hiring a replacement, training costs, loss of critical knowledge and the impact on customer relationships. You should start by analyzing the percent turnover at specific milestones (3 months, 6 months, 1 year, etc.) and evolve into using multivariate regressions to predict who will reach each milestone. As you get more sophisticated, you can build a survival model to understand what proportion will stay with your company over time. Finally, you can look at a survival/hazard rate model to test what factors accelerate the risk of exit.
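A survival curve of the kind mentioned above can be sketched with the Kaplan-Meier estimator, one standard way to build such a curve (the course may use a different method). The tenure records below are made up for illustration, and `kaplan_meier` and `survival_at` are hypothetical helper names. The key idea is that people who are still employed are not counted as exits; they simply stop contributing information after their current tenure.

```python
def kaplan_meier(records):
    """Kaplan-Meier survival curve from (tenure_months, has_left) records.

    Employees still employed (has_left=False) are treated as censored: they
    count as at risk up to their current tenure, but never as exits.
    Returns (month, share_still_employed) for each month with an exit.
    """
    exit_months = sorted({t for t, left in records if left})
    survival = 1.0
    curve = []
    for month in exit_months:
        at_risk = sum(1 for t, _ in records if t >= month)
        exits = sum(1 for t, left in records if left and t == month)
        survival *= 1 - exits / at_risk
        curve.append((month, survival))
    return curve


def survival_at(curve, month):
    """Estimated share of hires still employed after the given month."""
    share = 1.0
    for t, s in curve:
        if t <= month:
            share = s
    return share


# Hypothetical records: (months of tenure so far, whether the person has left).
records = [
    (2, True), (3, True), (5, False), (6, True), (8, False),
    (12, True), (12, False), (14, False), (18, True), (24, False),
]

curve = kaplan_meier(records)
for milestone in (3, 6, 12):
    print(f"estimated share remaining after {milestone} months: "
          f"{survival_at(curve, milestone):.0%}")
```

Reading the curve at 3, 6 and 12 months gives the milestone view described above, and comparing curves across groups is a natural next step before fitting a hazard model.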

During the course, they also provided some interesting data on why people leave. The decision to quit is most commonly driven by external factors, such as comparing the current job to a new opportunity. This understanding is critical: although internal factors do play a role, they have a relatively small relationship to how likely people are to churn.

To reduce churn over time, the instructors of the course suggest an informed hiring strategy (where predicting churn is integrated into who is hired) and targeted interventions (reduce factors that accelerate risk of exit, address unmet needs, focus retention efforts, etc.).

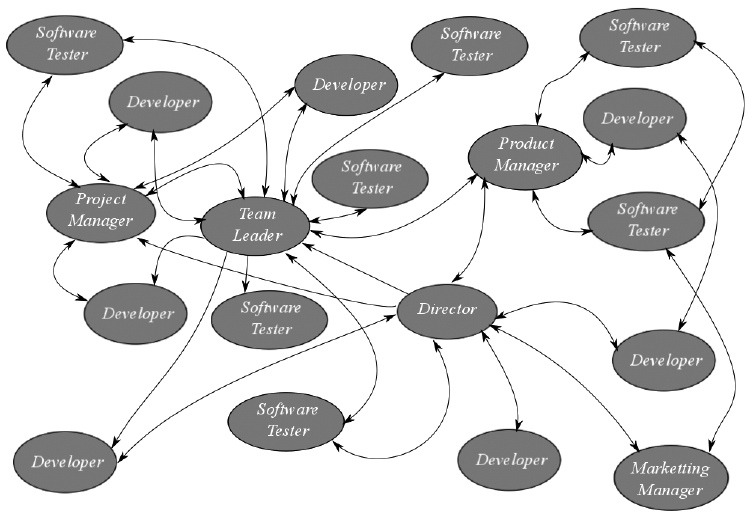

Using network analysis to improve collaboration

Another great takeaway from the course was how to use network analysis to understand, improve and incentivize collaboration. Without getting too granular, network analysis involves looking at the informal links between employees: who gets information from whom and in what direction(s) that information is flowing. Once you draw that map, you can understand who is central to communications, who is outside the map, areas for improvement and people who should be rewarded for their role in collaboration.

While there are many details to creating and analyzing a network, there are five key areas to focus on when looking at individuals (there are no right and wrong answers for each attribute, optimizing depends on the goal and environment):

- Network size. How many people are they connected to.

- Network strength. How important and how frequent are the lines of communication.

- Network range. How many different groups are they connected to. Range would be small if you are connected to everyone on your team, even if it is a big team, and large if you are connected to one person at every other corporate function (e.g. marketing, accounting, analytics, etc.).

- Network density. Are the connections connected to different people or to each other.

- Network centrality. Is everyone equally central or are there some in the middle and others on the fringes.
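Several of the attributes above can be computed directly once the communication map is written down. The sketch below is a minimal illustration: the names, links and departments are invented, and the exact definitions are assumptions (range counts groups other than the person's own, density is the share of a person's contacts who are also connected to each other, and centrality is simple degree centrality). Network strength is left out because it would need data on how often and how importantly people communicate.

```python
from itertools import combinations

# Hypothetical communication map: who regularly exchanges information with whom.
links = [
    ("ana", "ben"), ("ana", "cal"), ("ben", "cal"),
    ("ana", "dee"), ("dee", "eli"), ("ana", "fay"),
]
department = {
    "ana": "product", "ben": "product", "cal": "product",
    "dee": "marketing", "eli": "marketing", "fay": "analytics",
}

# Build an undirected adjacency map from the link list.
contacts = {person: set() for person in department}
for a, b in links:
    contacts[a].add(b)
    contacts[b].add(a)


def network_size(person):
    """Number of people this person is directly connected to."""
    return len(contacts[person])


def network_range(person):
    """Number of distinct groups, other than the person's own, that they reach."""
    reached = {department[c] for c in contacts[person]}
    return len(reached - {department[person]})


def network_density(person):
    """Share of possible ties among a person's contacts that actually exist."""
    pairs = list(combinations(sorted(contacts[person]), 2))
    if not pairs:
        return 0.0
    return sum(1 for a, b in pairs if b in contacts[a]) / len(pairs)


def degree_centrality(person):
    """Share of all other people in the map this person is connected to."""
    return network_size(person) / (len(contacts) - 1)


for person in ("ana", "ben", "eli"):
    print(person, network_size(person), network_range(person),
          round(network_density(person), 2), degree_centrality(person))
```

In this made-up map, one person bridges three departments while another only talks within a tight cluster, which is the kind of contrast the size, range, density and centrality measures are meant to surface.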

Understanding how your company’s network works will allow you to understand collaboration patterns. For example, by deconstructing performance, you can understand if collaboration patterns impact performance. If there is a positive causal relationship, you can work to replicate or improve these relationships. If there is no relationship, your team might be wasting time on unnecessary collaboration.

You can use this analysis to understand if collaboration is needed and where. Then you can strategically build ties and bridges between different parts of the organization. This result can be achieved with:

- Cross-functional meetings

- Conference calls or video conferences

- Job rotations

- Site visits

- Events

You should also identify where collaboration is unnecessary or overly burdensome and reduce demands on people. Match overloaded people with well-regarded employees who are under-utilized, who can relieve some of the burden. Also identify a small number of new connections that would have the biggest positive impact on team connectivity and shift responsibilities more evenly across members.

Tying performance evaluation with collaboration

People analytics can be particularly helpful in connecting the performance evaluation methods discussed above with analysis of collaboration. As I wrote earlier, the key to good performance reviews is understanding what drives the outcomes you are looking for. If collaboration is one of those success drivers, you need to evaluate it thoroughly and incorporate it into performance reviews and internal promotions (you do not want to promote someone weak at collaboration into a role where it is vital to success).

You should revise your evaluation systems to include collaboration. First, this will provide incentive to employees to build and use meaningful relationships. Second, it will recognize team members who help others win new clients or serve current customers, even if those direct results accrue to someone else (the basketball player who passes the ball rather than dunks).

To achieve this goal, you need to have the right measures. First, if you are assessing individual collaboration, you need to look at elements the individual controls. Second, you need reliability: the assessments should remain consistent over time and across raters. Third, the measures must have validity (accuracy). Fourth, there needs to be comparability: you need to be able to use the measures across all the people you are evaluating. Finally, the measures must be cost effective; it should not be too expensive to collect the information.

Key takeaways

- You need to align performance evaluations with the underlying factors that create success; deconstruct what leads to the outcomes you want and then assess people on those factors.

- Some common problems when evaluating people include context (attributing results to a person when the environment drove success or failure), interdependence (assessing on an individual level a result that was driven by a team), self-fulfilling prophecies (people perform consistent with expectations) and reverse causality (we attribute causality to correlation, even though the factors may not be related or may be in the other direction).

- You should assess how your team or company works as a network, looking at the relationships, and then encourage and grow ones that lead to desired outcomes.